During this 4 weeks I completed my pending work from the phase 1 i.e. implementing matrix solver and start working on my phase 2 work. In this period I made PR for cgs solver, improved lsmr and also added some distribution methods to Generator.

Fixing the larger number of kernel invocation in LSMR algorithm.

In the previous post I wrote about various method I used to solve the problem regarding the number of individual kernel calls which is causing very bad performance as compared to that of SciPy version, but this time my mentor suggested that we should use try to limit the matrix and vector computation on GPU and other scalar arithmetic on CPU. And BAM!! this works and the there was very huge performance improvement. You can see the comparision of time taken for computing the solution vector in the following table, where we can see that as the size of array increase gap between computational time required by scipy and cupy widens.

| size of matrix | SciPy | CuPy |

|---|---|---|

| 500x500 | 53.843938446044916 | 287.2928771972656 |

| 1000x1000 | 130.22197265625 | 601.302734375 |

| 5000x5000 | 4808.26650390625 | 2992.645263671875 |

| 10000x10000 | 20126.594335937505 | 3263.73974609375 |

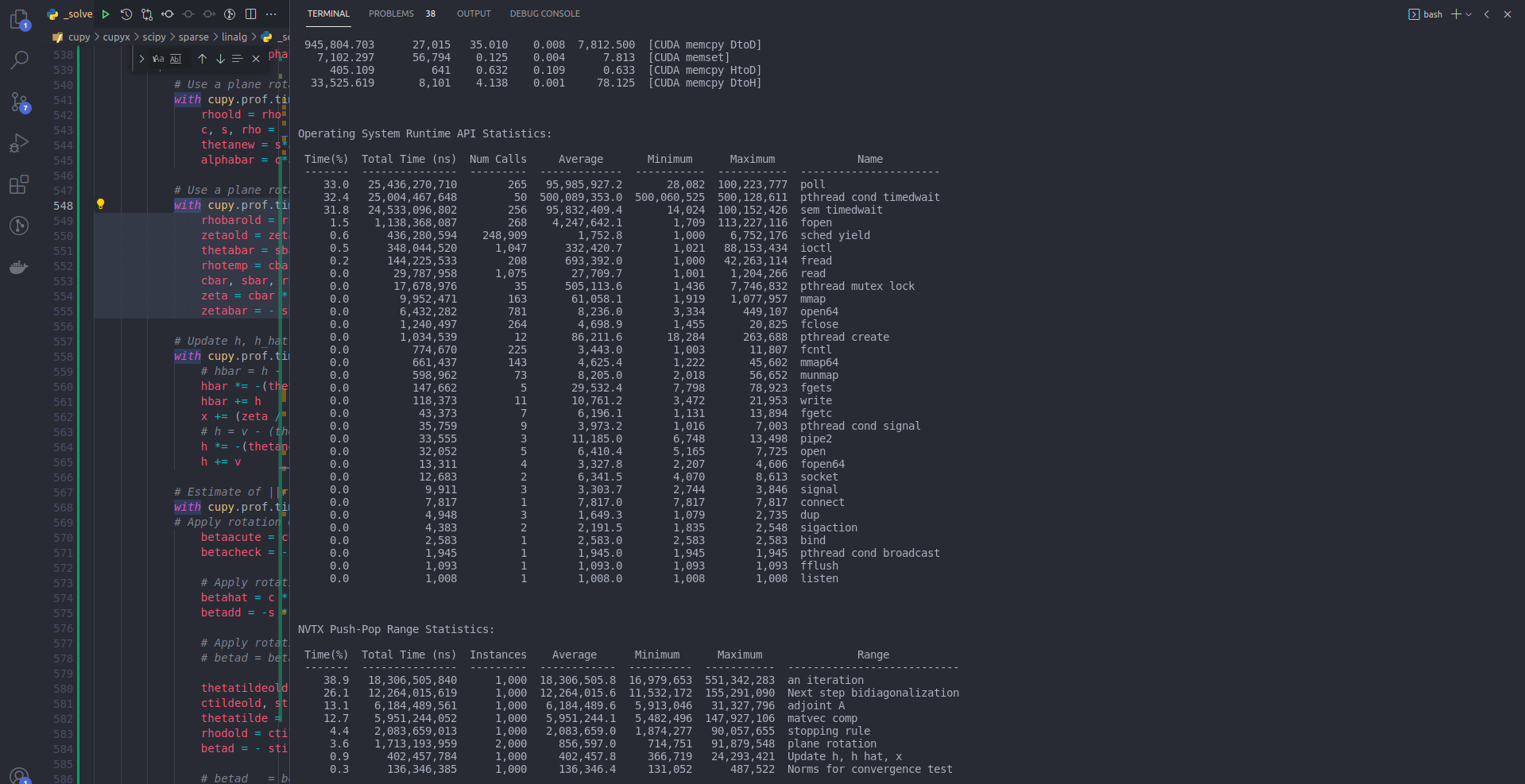

And looking at the profiler we can see that the number of kernel invocation was reduced

How we fix that

The main problem in the implementation was that all the calclation which involves vector are done on GPU even operation like value comparision, maxima and minima and so on. So what we do here was we just call the variable in GPU onto the CPU and used that for doing less extensive operation. This was done using item method which converts the array with single term into scalar. This reduces the large number of independent kernel call which increases the performance of the algorithm.

CGS solver

Conjugate Gradient Squared is varient of biconjugate gradient descent method and has same number of operation per iteration, but this method does not involve computation with AT. Thus reduce computation where calculation of AT is computationally costly.

Following is the pseudo code of preconditioned Conjugate Gradient Squared Method

Compute r (0) = b − Ax (0) for some initial guess x (0)

Choose r̃ (for example, r̃ = r (0) )

for i = 1, 2, . . .

ρi−1 = r̃T r(i−1)

if ρi−1 = 0 method fails

if i = 1

u(1) = r(0)

p(1) = u(1)

else

βi−1 = ρi−1 /ρi−2

u(i) = r(i−1) + βi−1 q(i−1)

p(i) = u(i) + βi−1 (q(i−1) + βi−1 p(i−1) )

endif

solve Mp̂ = p(i)

v̂ = Ap̂

αi = ρi−1 /r̃T v̂

q(i) = u(i) − αi v̂

solve M û = u(i) + q(i)

x(i) = x(i−1) + αi û

q̂ = Aû

r(i) = r(i−1) − αi q̂

check convergence; continue if necessary

end

Above algorithm for CGS solver over cupy has very huge performance improvement compared to SciPy. As you can see the result in following table. As the size of matrix increases time required to compute the solution decreases by very huge amount. For matrix of size 5000x5000 there is nearly 4x improvement in the time required to get the solution.

| size of matrix | SciPy | CuPy |

|---|---|---|

| 100x100 | 47.81260681152344 | 162.54141235351562 |

| 500x500 | 277.829833984375 | 812.6845703125001 |

| 1000x1000 | 706.6454467773438 | 1627.8208618164062 |

| 3000x3000 | 8468.41064453125 | 4872.149169921875 |

| 5000x5000 | 33556.39062500001 | 8166.427872492932 |

Along with these two solver I am working on two more solver MINRES and BiCG.

Distribution in new random Generator

During period I made PR for binomial, geometric, hypergeometric distribution and also made PR for improvement in the beta distribution. Of all the distribution I am successful in getting geometric and hypergeometric distribution merged onto the main repo. And in the upcoming week I will add more distribution to the generator. I’ll write seperate post describing the random number generator and and how that was implemented in CuPy using the cuRAND library provideed by CUDA.

Following is the example of the random number generated by using the new added distributions

>>>import cupy as cp

>>>ngood = cp.random.randint(low=5, high=10, size=(5))

>>>nbad = cp.random.randint(low=5, high=10, size=(5))

>>>nsample = cp.random.randint(low=1, high=10, size=(5))

>>>rng = cp.random.default_rng()

>>>rng.hypergeometric(ngood, nbad, nsample)

array([4, 5, 1, 2, 3])

>>>p = cp.random.random(10)

>>>rng.geometric(p)

array([1, 1, 1, 3, 3, 1, 1, 2, 1, 6])

And all these distribution has very huge performance improvement as compared to NumPy.

Tasks for upcoming weeks

In upcoming weeks I planned to added more disritbution and complete my two more solver i.e. MINRES and BiCG and also get my binomial distribution merged.